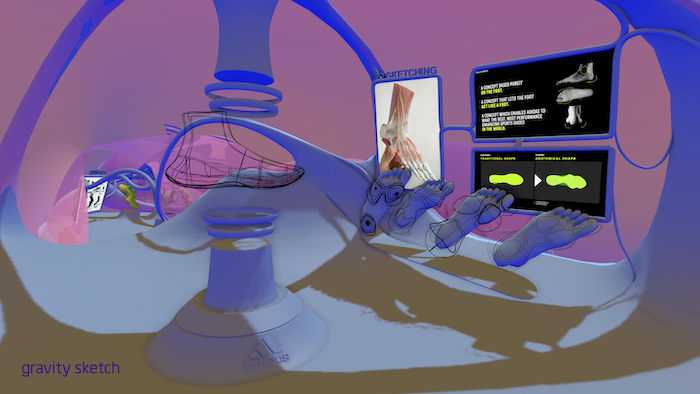

A new Adidas maker space—located inside a giant, digital sneaker—features virtual-reality 3D design tools for long-distance design collaboration.

Jean Thilmany, Senior CAD Editor

When Adidas designers were tasked with creating a seamless sneaker, they donned their Oculus headsets and got to work in the virtual world.

The team met regularly in a large, footprint-shaped studio that existed only in a virtual world entered through the Oculus. Of course, the team members were really in separate physical spaces, but with the help of the headsets and the immersive world, they felt as though they were meeting in real life.

The move dramatically slashed the time spent creating an initial mock-up: from 21 days to less than one day, says Paul Scholz, Adidas senior footwear designer.

“In the design process, you create boards to inspire you and you brainstorm together. What we did in this virtual environment was the same, but we designed the actual product,” he says.

Scholz and his colleagues spoke in November 2021 at the online Around Conference. The conference sponsor, Gravity Sketch, makes a 3D-design platform hosted in virtual reality, which is the tool Adidas used to help design its Futurenatural shoes. The company gave the same name to its range of tools accessible within the immersive environment.

The one-piece, seamless sneaker line just debuted, about 18 months after the design team’s initial virtual meeting. The Futurenatural sneakers are molded rather than sewn. That is, the upper is fused to the sole with high pressure and heat to create what looks like one continuous shoe, with no obvious break between the top and the bottom.

Traditionally, footwear designers often work in two dimensions, extrapolating 2D lines to form lateral views of the proposed shoe. But building out designs in the 3D virtual environment makes a mockup materialize more quickly, says Robert Stinchcomb, Adidas creative designer. He played a lead role in bringing the virtual system into the company.

“Now it’s down to showing up at work at nine and at 3 pm having a mockup at the point where you could see everything and talk about ‘let’s switch the layering here,’” Stinchcomb says.

The mockup is an early-stage design “almost like a napkin sketch,” he adds. “This is a place we sketch out designs before fleshing them out, before we make a sample. And we’re doing it in a room that is super collaborative where we can talk to each other even though we may not even be in the same country.”

The team can quickly come up with 10 or 15 sneaker concepts, says Arnau Sanjuan, Adidas design director, footwear innovation.

“It’s easy to see how designs would look, to play around with them, to brainstorm ideas together quickly,” he says.

The Futurenatural studio looks much like a virtual-reality game. Designers move about in the virtual world, passing between a series of “stations,” the same way they would in any virtual-reality game in which avatars work together.

The first stop is for design. Here, designers create the 3D model of the shoe. Surfaces are added at a second stop. Then it’s on to detailing and rendering. All before a physical prototype is created.

Because the shoe is easy to see and understand, the finished mockup can be immediately shared with manufacturers and marketing people for their feedback. They needn’t have an Oculus, as the designs can be captured and shared via other methods. Suggested changes are quickly made within the virtual environment.

James Harden’s foot

The mockup starts with the human foot. But for Futurenatural, the company took another tack. Like many shoemakers, the company had been using a generic last—the term for a 3D model of the foot—meant to represent the common sneaker wearer. For the Futurenatural line, Adidas wanted a better fit.

Adidas scanned thousands of people’s feet, including those of professional athletes. Of course, the popular shoemaker already had prints of athletes who have promoted their own Adidas sneaker in the past. James Harden, basketball player for the Brooklyn Nets, is among those elite players. The Futurenatural line debuted with the player’s fifth signature basketball sneaker, Harden Vol. 5.

The engineers pulled together all types of feet (large, small, narrow, wide) to best represent the foot. From that, they developed a new “last.”

Designers make their first foray into the Gravity Sketch virtual environment to fit the last with experimental sneaker concepts. Here is where they play with articulated lines in the 3D environment, rather than extrapolating view and fit from a 2D print, Stinchcomb says. They can rotate the view to see how the shoe would look, from the top, bottom, and sides.

At this first stop in their virtual environment, Stinchcomb and fellow designers work out new ideas for a sneaker’s footpad and play around with ways the upper might be molded and pressed. They sculpt arches and add padding to the sole in areas where the foot would benefit from reinforcement.

Collaboration is a key part of this design stage, with the designers talking back and forth in the virtual world as they gesture at parts and play around together with the design, Stinchcomb says.

“We take a shoe and explode it and invite people into the space and spec out every single detail. We can blow it up to the size of a warehouse and they can swim around the shoe, doing a deep dive on every part,” he says.

“At such an early stage, we can discuss complex details within the form,” he adds. In fact, these early iterations hold enough information to be fleshed out even further, which takes place at the next stage, or station: surfacing.

This is where the skeleton comes together and where volumes are defined, Stinchcomb says. Here, designers wrap their shoe to simulate the material they have in mind for end use. At this step, they create a continuous, lifelike surface with the help of SmoothKit software to sharpen effects.

The team also uses Adobe Substance Painter to “get the feel of the material” and to shade the image so it looks “as realistic as possible,” says Marius Jung, senior designer.

Because the footwear industry makes heavy use of Adobe Photoshop and Illustrator, these new tools were a bit of a departure for the team, he says.

“In the past, we’d spend hours creating the right shadows and lighting, and now we’re able to speed that up and dive right into detailing like we’ve never been able to before,” he says.

When designers are satisfied with the shape and look of the shoe, they move to the next area within the virtual design space. At this juncture, they add details like laces and lace loops to their continuous surface. The team then renders the illustration with KeyShot software to give the image a photorealistic, lifelike quality.

At this stage, the team can share the image with other Adidas departments, mainly marketing and manufacturing. These teams offer their suggestions long before a final virtual prototype, much less a physical prototype, is created, Jung says. Their input is important, because the Futurenatural line is a step apart from the usual. Designers need to know, and need to know early: Can the manufacturer make a mold for this shoe using the designated materials? Will buyers be delighted or dismayed with this form for a new integrated sole?

Members of those teams can be invited into the virtual world if they have access to an Oculus. If not, the images can be shared on a desktop, Jung says.

Adidas worked with one of its factories to develop a new production process for the new shoe. During design, representatives from that manufacturer weighed in with tooling ideas. They also offered feedback about how they might produce the welting and lace loops. Marketers made suggestions about brand placement and other features.

Mutual maker space

The Futurenatural design team had been working together for almost a year in March 2020 when the COVID pandemic forced many companies to move employees to home offices. Some engineering and design businesses stuttered a bit as they found new ways to collaborate outside an office.

Even people regularly tied together by collaboration software might have felt a hiccup as they accessed software on their home computers, in their home spaces. Meanwhile, Sanjuan says, he and his Adidas teammates stepped right back into their familiar space: the virtual office and maker space within the virtual shoe.

“I’ve always been one to be in the workshop figuring things out with my hands and working with materials,” he says. “I found my work in 3D could replace those things. We work together in that world so closely.”

Scholz too emphasized the inventive atmosphere that prevailed within the digital footprint.

“The virtual space kept the creativity and the spirit alive during the pandemic,” he says. “It’s just a fun, intuitive and playful way to create serious products.”

And that playfulness showed with the debut of the Harden Vol. 5 in January 2021 and the ensuing Futurenatural products, which feature polka dots, splotches and paint-like splurges in a number of patterns and colors, wavy soles, and an upper that melds seamlessly with the bottom of the shoe for an almost sock-like look.

In the future, the line is expected to include more materials and new designs. The shoes will, of course, be designed within the digital shoeprint using Gravity Sketch 3D design technologies.

“The virtual reality system definitely demonstrated its value,” Sanjuan says. “Now everyone wants to try it. Because the learning curve is so easy, it’s spreading like wildfire to put 3D in anyone’s hands who wants it.”

Those newcomers are welcome, he adds.

“Especially at a big, grand company like Adidas, it’s important to inject new processes into footwear and to look at things in a different way,” Sanjuan says.