The conceptual phase of design is the only one within the product development window that must be inherently fluid, and in a sense, should be done in a leisurely manner. What, you ask? The word “leisure” is probably not used often when it comes to designing products, right? OK, let me explain.

In order to fully evaluate a suitable number of potential design concepts, engineers and designers must have the luxury of time. After all, how can you determine an optimum solution until you’ve discounted an adequate number of bogus ones? Unfortunately, not many of them get that time.

According to a conceptual design study conducted by PTC, 92% of respondents felt that their product development process would benefit tremendously from the ability to evaluate more concept ideas before moving forward into detailed design and documentation. Another 61% said that the concept design process is often cut short due to schedule constraints.

Time, after all, is critical to meeting design production schedules and shipping products on time. It’s the underlying reality of all those involved with product development.

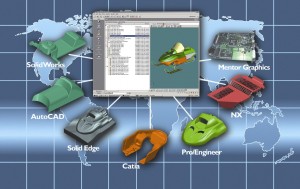

Which route to take: direct or feature-based?

Once a concept design has been approved and moved forward, time is of the essence. During our “The Pros and Cons of 3D Modeling Paradigms” webinar, one of the questions posed to our panel of speakers was which modeling paradigm is most time-efficient when moving from the concept stage to the documentation stage, keeping in mind that a good percentage of the dimensions can be automatically generated within the history-based model. The answers were surprising and, I thought, worth sharing.

Dan Staples, vice president, Solid Edge Product Development, Siemens PLM Software

In a history-based system the dimensions are in the sketches and then those are retrieved into the drawing. In a direct modeling system, or at least in Solid Edge, the dimensions take the form of what we call PMI (product manufacturing information), or the 3D dimensions that are on the faces of the model instead of in the sketches. That doesn’t change the ability to retrieve those into the drawing. They’re on the faces instead of in the sketches, but it’s the same thing in terms of the ability to retrieve them into the drawing and use them.

Brian Thompson, vice president of Creo Product Management, PTC

Yeah, I think if you have good workflows for creating or showing those dimensions in the 2D context, it could be similar in terms of efficiency to do either. I don’t see one modeling paradigm strongly standing out. There are good, efficient workflows for creating dimensions on models that have no underlying sketches, and there are good workflows for showing them on models that do. As Dan and I have said, dimensions in the direct modeling environment can, in fact, still drive geometry if the user tells the system that’s what he wants.

You can still even get that behavior. Maybe not to the level you would get with a large feature-based, history-based parametric model, but you could still get that behavior. There may be some circumstances where one is slightly better than the other, but I’d say it’s fairly close in terms of efficiency to create that documentation. Would you agree, Dan?

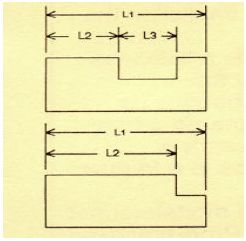

Dan Staples

I would actually say it’s somewhat more efficient. One of the constraints we forget about is that when you build up a history-based model, you build it up sketch by sketch by sketch. That’s not necessarily a natural way to dimension the part. In fact, it’s pretty bad practice in terms of a dimensioning scheme because you tend to have a lot more dimensional stack-ups than you would like. Whereas if you’re in a direct modeler, you can put in a dimension between two faces on the model that are far from each other, with 50 features in between, and so you can actually have a much more natural dimensioning scheme that’s more immediately usable in the drawing with direct models, in my opinion.

Brian Thompson

Yeah, I think we’ll agree there that bad modeling technique in your history-based parametric modeling will make it even harder in the drawing to do that. If you’ve got a good, well-done history tree then maybe it’s not as hard, but it’s a good point.
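
To put rough numbers on the stack-up point Staples raises, here is a minimal sketch in Python. Nothing in it comes from the webinar: the chain of 10 dimensions, the ±0.1 mm tolerance, and the function names are all my own illustrative assumptions. It compares the accumulated tolerance on the distance between two far-apart faces when that distance is only implied by a chain of sketch dimensions versus when it is dimensioned directly, using the standard worst-case and root-sum-square (RSS) stack-up estimates.

```python
import math


def worst_case_stack(tolerances):
    """Worst-case stack-up: every tolerance in the chain adds linearly."""
    return sum(tolerances)


def rss_stack(tolerances):
    """Root-sum-square stack-up: a common statistical estimate that
    assumes the individual variations are independent."""
    return math.sqrt(sum(t * t for t in tolerances))


# Assumption: the face-to-face distance is implied by a chain of
# 10 sketch dimensions, each held to +/-0.1 mm (symmetric tolerances).
chained = [0.1] * 10

# Assumption: the same distance carries a single +/-0.1 mm dimension
# placed directly between the two faces.
direct = [0.1]

print(f"chained, worst case: +/-{worst_case_stack(chained):.3f} mm")
print(f"chained, RSS:        +/-{rss_stack(chained):.3f} mm")
print(f"direct:              +/-{worst_case_stack(direct):.3f} mm")
```

Under these assumptions the chained scheme lets the face-to-face distance drift several times further than the single direct dimension does, which is the practical cost of a dimensioning scheme that simply mirrors the order in which sketches were created.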

The bottom line

Though it’s not easy to sum up all the good points here, it’s clear that the most time-efficient way to move your designs from concept to documentation is to follow best practices in how you model your products and to use good dimensioning workflows. In other words, good modeling techniques will always get you from Point A to Point B faster, whether you’re working in a direct modeling or feature-based modeling 3D CAD system.

If you missed the “Pros and Cons of 3D Modeling Paradigms” webinar, you can watch it in its entirety here.

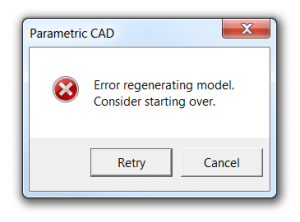

The model brittleness problem inherent with parametric feature-based modeling is a really big deal. And it’s something, honestly, that I don’t have a great answer for. I’ve even asked a few power users who I know, and their answers seemed to involve a bit of hand-waving, and a reference to having lots of experience.


Direct modeling, a syncretic melding of concepts pioneered by CoCreate, Trispectives, Kubotek and many others, has shown the most promise to cure the parametric curse.
