There’s been a big push by simulation software vendors to get engineers and designers to start incorporating analysis tools into their product development processes. High-end simulation tools have traditionally been used by specialists, or analysts, whose job is to put design geometry created by engineers through its paces, using analysis tools to validate that designs will be structurally sound and will operate as intended once built.

The motive is obvious. There are many more design engineers than there are analysts, so making their products more engineer-centric opens up much bigger potential markets for simulation vendors. There are also, however, many compelling reasons for engineers to use analysis tools early in the design process. Doing so speeds up development, cuts time to market, and helps them identify potential design flaws long before costly physical prototypes are built.

New versions of Nastran solver released

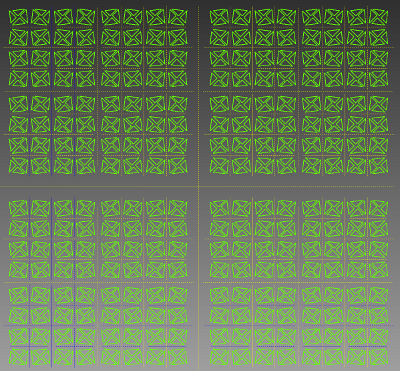

One of these high-end tools is Nastran, finite-element analysis (FEA) software now sold by Autodesk after its acquisition of NEi Software back in May. The goal of the acquisition was to expand the company’s structural analysis capabilities, and it follows similar strategic technology acquisitions in the computational fluid dynamics (CFD), plastics and composites solutions spaces.

Autodesk Nastran offers an industry-recognized FEA solver for analyzing linear and nonlinear stress, dynamics and heat transfer characteristics of structures and mechanical components. Nastran reports results in real time and allows solution parameters to be changed while solving, which helps engineers and analysts obtain accurate results for complex simulations.

Autodesk Nastran In-CAD 2015 is a CAD-embedded, general-purpose FEA tool powered by the Autodesk Nastran solver. The new Nastran In-CAD offers a wide range of simulation capabilities across multiple analysis types, bringing high-end simulation into a CAD-embedded workflow. The software works within both the Autodesk Inventor and SolidWorks 3D CAD systems.

Taking FEA to the Cloud

Autodesk Nastran Solver is available to customers using the Autodesk Simulation Mechanical and Autodesk Simulation Flex product offerings. Autodesk Simulation Flex, formerly Autodesk Sim 360 Pro with Local Solve, consists of:

* Autodesk Simulation Mechanical with cloud-enabled FEA tools for static stress, linear dynamic analysis and mechanical event simulations;

* Autodesk Simulation CFD Motion, including the Design Study environment and 3D CAD connectors, with cloud-enabled CFD tools for fluid flow and thermal simulations; and

* Autodesk Robot Structural Analysis with cloud-enabled simulation for detailed analysis and code checking on a range of structures, including buildings and frame structures.

“We’ve been working with Autodesk tools since the acquisition of Algor and CFDesign and have seen first-hand how incredibly powerful the combination of strong numerical solvers and Autodesk’s advanced visualization, cloud and user interface tools can be,” said Dmitriy Tseliakhovich, Co-founder, CEO and CTO at Escape Dynamics. “Nastran is a great solver with very powerful non-linear and dynamic simulation capabilities so its integration with Autodesk’s front end and elastic cloud computing platform is extremely exciting.”

Autodesk Nastran and Autodesk Nastran In-CAD are now available. Details on both products, including licensing and pricing options, are available from Autodesk.

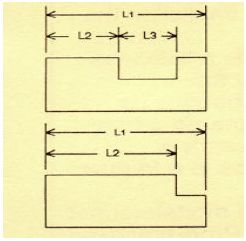

What is the failed promise of parametric CAD? In short, model reuse.

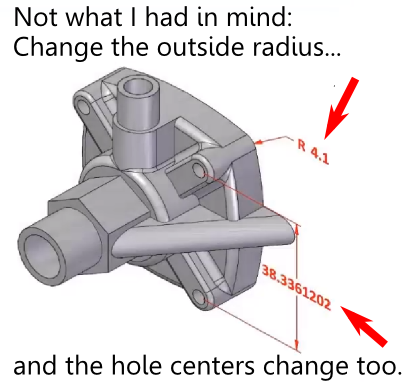

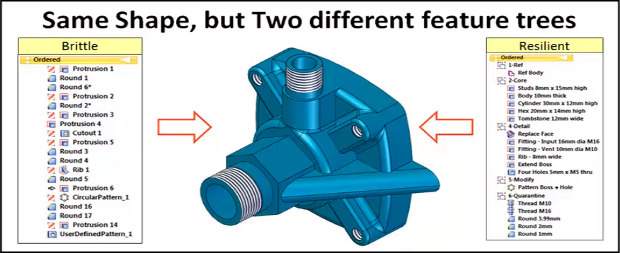

The model brittleness problem inherent in parametric feature-based modeling is a really big deal. And it’s something, honestly, that I don’t have a great answer for. I’ve even asked a few power users I know, and their answers seemed to involve a bit of hand-waving and an appeal to having lots of experience.

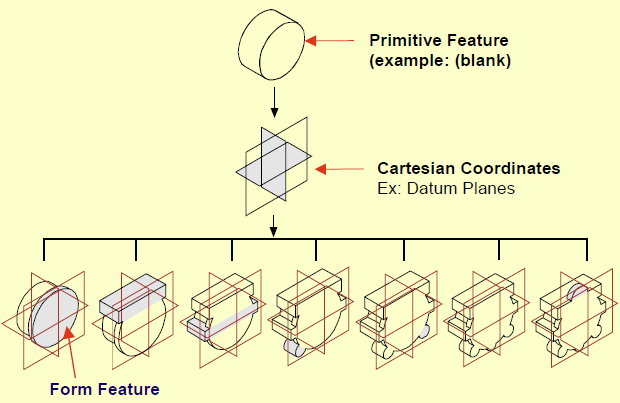

Direct modeling (a syncretic melding of concepts pioneered by CoCreate, Trispectives, Kubotek and many others) has shown the most promise for curing the parametric curse.